
hye-log

[Boostcamp AI Tech] WEEK 07_DAY 29

🥪 Individual Study

[8] Training & Inference 2 - Process

1. Training Process

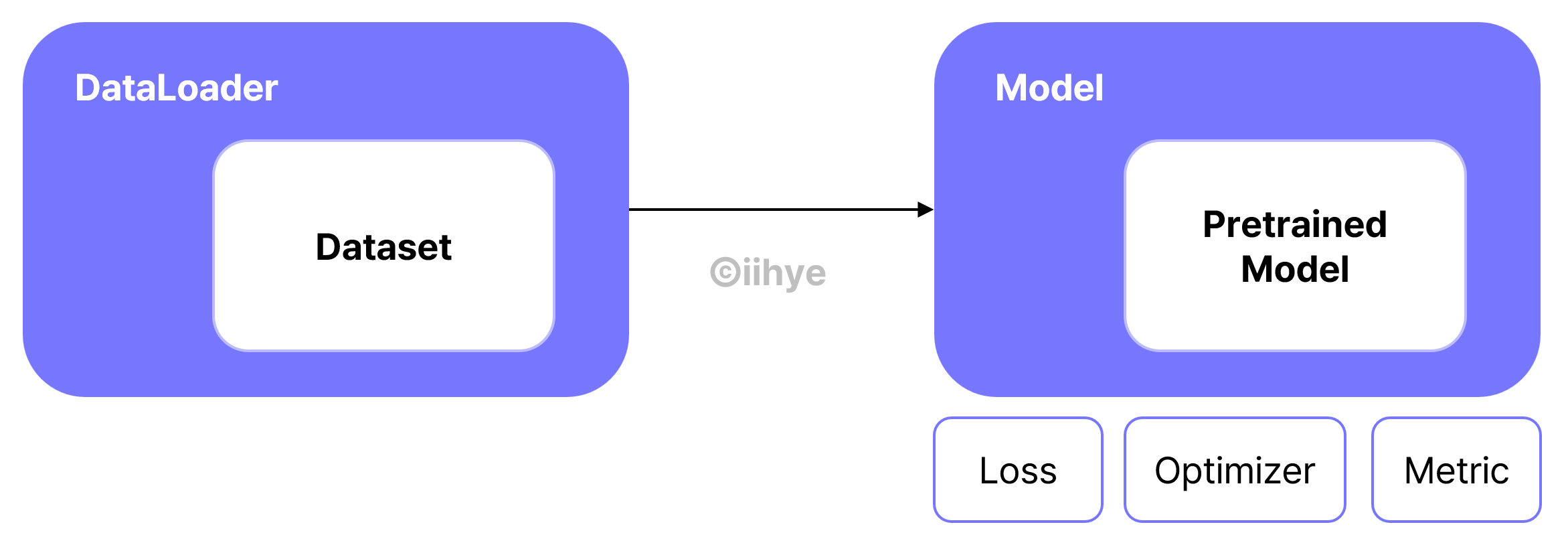

1) Preparing for training

2) Understanding the training process

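- model.train(): layers such as Dropout and BatchNorm behave differently during training, so the model has to be put into training mode explicitly.

model.train(mode=True)

- optimizer.zero_grad(): loss.backward() adds each parameter's gradient to whatever is already stored in its .grad, and the optimizer then uses those values to update the parameters. Because the loop runs batch after batch, the gradients from the previous batch would otherwise remain, so they must be reset at the start of every iteration.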

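- loss: compute the loss by passing outputs and labels to the loss function criterion, then call loss.backward() to compute the gradient of the loss with respect to every parameter.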

loss = criterion(outputs, labels)

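loss.backward()

- optimizer.step(): the optimizer received the model's parameters as input when it was created, and backward() has now filled in their gradients, so step() applies the update to the actual parameter values.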

optimizer.step()
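The steps above can be put together into one training epoch. This is a minimal sketch in PyTorch, assuming a toy linear-regression setup: the random data, the nn.Linear model, nn.MSELoss as criterion, plain SGD, the batch size and the helper train_one_epoch are all illustrative choices, not the lecture's code. The first few lines also show why zero_grad is needed: backward() sums into .grad rather than overwriting it.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Why zero_grad matters: two backward() calls add their gradients together.
w = torch.ones(1, requires_grad=True)
(2 * w).sum().backward()
(2 * w).sum().backward()
print(w.grad)  # tensor([4.]): the two gradients of 2 were summed

# Hypothetical toy data: 64 samples, 3 features, targets from a fixed linear rule
inputs = torch.randn(64, 3)
labels = inputs @ torch.tensor([[1.0], [-2.0], [0.5]]) + 0.1

model = nn.Linear(3, 1)
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)


def train_one_epoch(model, criterion, optimizer, inputs, labels, batch_size=16):
    """Hypothetical helper: one pass over the data, returns the mean batch loss."""
    model.train(mode=True)  # enable training behaviour (Dropout, BatchNorm)
    total, n_batches = 0.0, 0
    for start in range(0, len(inputs), batch_size):
        x = inputs[start:start + batch_size]
        y = labels[start:start + batch_size]
        optimizer.zero_grad()          # clear gradients left over from the previous batch
        outputs = model(x)             # forward pass
        loss = criterion(outputs, y)   # compute the loss
        loss.backward()                # accumulate d(loss)/d(param) into each param.grad
        optimizer.step()               # update the parameters using those gradients
        total += loss.item()
        n_batches += 1
    return total / n_batches


losses = [train_one_epoch(model, criterion, optimizer, inputs, labels) for _ in range(20)]
print(f"first epoch loss {losses[0]:.4f}, last epoch loss {losses[-1]:.4f}")
```

The order inside the loop is the point here: zero_grad before the forward pass, then loss, backward and step. Calling zero_grad anywhere between step and the next backward works equally well.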

2. Inference Process

1) Understanding the inference process

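- model.eval(): switches the model to evaluation mode; this is the same as calling model.train(False).

model.eval()

- with torch.no_grad(): disables gradient tracking for everything executed inside the with block, so no computation graph is built and memory is saved.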
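A minimal inference sketch in PyTorch, assuming a hypothetical small classifier with a Dropout layer and random input (none of this comes from the lecture). It shows that the two steps do different jobs: model.eval() turns Dropout off, while torch.no_grad() stops gradient tracking but does not change layer behaviour.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical classifier with a Dropout layer
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Dropout(p=0.5), nn.Linear(8, 2))
x = torch.randn(5, 4)

model.train()
train_a, train_b = model(x), model(x)  # Dropout samples a new mask on each call, so these usually differ

model.eval()                           # same as model.train(False)
with torch.no_grad():                  # no computation graph is built inside this block
    eval_a, eval_b = model(x), model(x)
    preds = eval_a.argmax(dim=1)       # predicted class index for each sample

print(torch.equal(eval_a, eval_b))     # True: Dropout is disabled in eval mode
print(eval_a.requires_grad)            # False: gradients were not tracked
```

Using only one of the two is a common mistake: no_grad alone still applies Dropout, and eval alone still records the graph.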

🥪 Today's Retrospective

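The first day of week 7 and the last day of October! I tried to install a new model that came up during the daily scrum, but some... unknown error occurred, so I ended up resetting the server... Because of that I had to clean up my code again, re-download the files, and reopen the server.. a hectic day from the morning on..+_+ I searched around trying to add a scheduler but couldn't get it to work, and then solved it during the peer session!! Since training takes so long, it's a shame that it limits how many experiments I can run (T_T), but I'll give it my best until the competition ends!!!!!!! (Fighting!)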
