hye-log

[Boostcamp AI Tech] WEEK 06_DAY 28

🔥 Individual Study

[7] Training & Inference 1 - Loss, Optimizer, Metric

1. Loss

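1) Loss function = Cost function = Error function

2) Many loss functions are available in the torch.nn package (e.g. nn.CrossEntropyLoss, nn.MSELoss)

3) loss.backward() : computes the gradients and stores them in each parameter's grad; the parameters themselves are updated afterwards by optimizer.step()

4) Some special losses

- Focal Loss : for class imbalance; samples the model already predicts with high probability get a small loss, while samples it predicts with low probability get a larger loss

- Label Smoothing Loss : represents the class target label softly instead of as a one-hot vector, which improves generalization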
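As a rough sketch of the two special losses above, the snippet below defines a multi-class focal loss and compares it with PyTorch's built-in label smoothing. The class name `FocalLoss`, the value `gamma=2.0`, the smoothing factor `0.1`, and the random toy batch are all assumptions made for illustration; they are not taken from the lecture.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FocalLoss(nn.Module):
    """Multi-class focal loss: (1 - p_t)^gamma * cross-entropy, batch-averaged."""

    def __init__(self, gamma: float = 2.0):  # gamma value is an illustrative choice
        super().__init__()
        self.gamma = gamma

    def forward(self, logits: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
        # Per-sample cross-entropy, i.e. -log(p_t) for the true class.
        ce = F.cross_entropy(logits, target, reduction="none")
        p_t = torch.exp(-ce)  # probability the model assigns to the true class
        # Confident samples (p_t near 1) are down-weighted toward zero.
        return ((1.0 - p_t) ** self.gamma * ce).mean()


torch.manual_seed(0)
logits = torch.randn(8, 3, requires_grad=True)  # toy batch: 8 samples, 3 classes
target = torch.randint(0, 3, (8,))

plain = nn.CrossEntropyLoss()(logits, target)
focal = FocalLoss(gamma=2.0)(logits, target)
# Built-in label smoothing (available in recent PyTorch versions).
smooth = nn.CrossEntropyLoss(label_smoothing=0.1)(logits, target)

# backward() only fills logits.grad; an optimizer.step() would apply the update.
focal.backward()
```

With `gamma=0` the weighting factor becomes 1 and the focal loss reduces to ordinary cross-entropy, which is a handy sanity check when tuning it.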

2. Optimizer

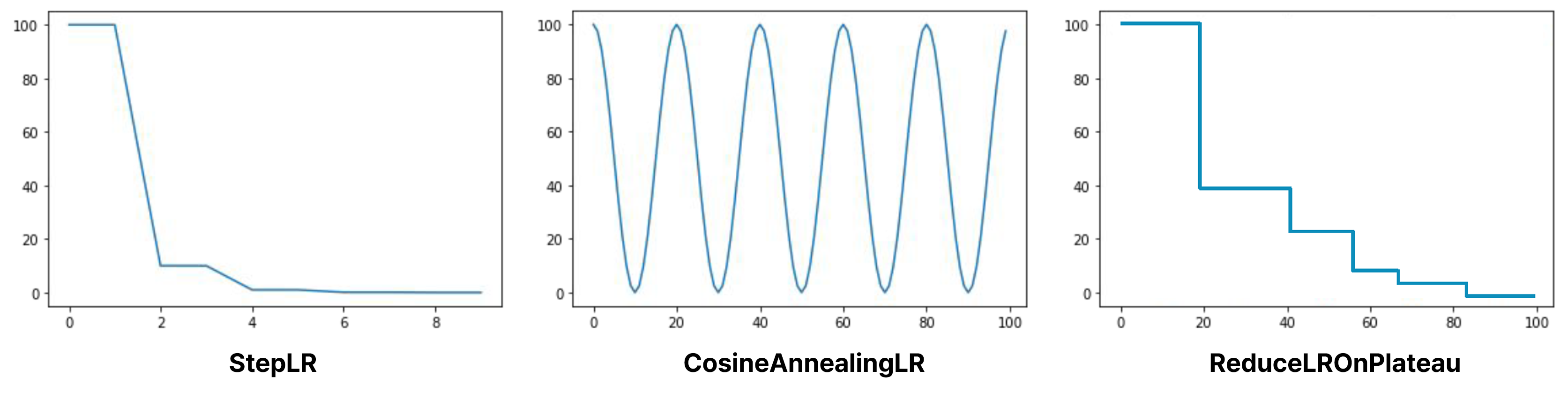

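1) The optimizer decides in which direction and how far to move the parameters; the learning rate scales the size of each step

2) LR scheduler : a way to change the learning rate dynamically during training

- StepLR : decreases the LR every fixed number of steps

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.1)

- CosineAnnealingLR : varies the LR along a cosine curve, so it swings over a wide range between the initial LR and eta_min

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=10, eta_min=0)

- ReduceLROnPlateau : decreases the LR when performance stops improving

scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'min')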
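To see how these three schedulers behave, the following sketch records the learning rate per epoch on a dummy parameter. The helper `lr_trace`, the base LR of 0.1, the SGD optimizer, `patience=2`, and the made-up validation losses are assumptions for illustration only.

```python
import torch
from torch.optim import lr_scheduler


def make_optimizer(base_lr=0.1):
    # A single dummy parameter is enough to drive a scheduler.
    param = torch.nn.Parameter(torch.zeros(1))
    return torch.optim.SGD([param], lr=base_lr)


def lr_trace(make_scheduler, epochs):
    """Return the LR in effect at the start of each epoch."""
    optimizer = make_optimizer()
    scheduler = make_scheduler(optimizer)
    lrs = []
    for _ in range(epochs):
        lrs.append(optimizer.param_groups[0]["lr"])
        optimizer.step()   # no gradients here, so nothing changes; keeps the call order
        scheduler.step()   # schedulers are stepped after the optimizer
    return lrs


step_lrs = lr_trace(lambda o: lr_scheduler.StepLR(o, step_size=2, gamma=0.1), epochs=6)
cos_lrs = lr_trace(lambda o: lr_scheduler.CosineAnnealingLR(o, T_max=10, eta_min=0), epochs=11)

# ReduceLROnPlateau needs the monitored metric passed to step().
optimizer = make_optimizer()
plateau = lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.1, patience=2)
for val_loss in [1.0, 0.9, 0.9, 0.9, 0.9]:  # hypothetical validation losses that stall
    optimizer.step()
    plateau.step(val_loss)
plateau_lr = optimizer.param_groups[0]["lr"]

print(step_lrs)
print(cos_lrs)
print(plateau_lr)
```

Unlike the other two, `ReduceLROnPlateau` reacts to a monitored value rather than the epoch count, so it is usually stepped once per validation pass.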

3. Metric

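1) An objective measure is needed to evaluate the trained model

2) The metric should be chosen to fit the state of the data

- Accuracy : suitable when the classes are reasonably balanced

- F1-score : suitable when the classes are imbalanced and you need to check whether the model performs well on each class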
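A small example of why the metric choice matters under class imbalance: the sketch below computes accuracy and macro-averaged F1 in plain Python. The function names and the 9-to-1 toy labels are assumptions made for illustration, and macro averaging is one of several ways to aggregate per-class F1.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose prediction matches the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy imbalanced data: 9 samples of class 0, 1 sample of class 1.
y_true = [0] * 9 + [1]
y_pred = [0] * 10  # a model that always predicts the majority class

acc = accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
print(f"accuracy={acc:.3f}, macro F1={f1:.3f}")
```

Here the majority-class predictor looks strong on accuracy while its macro F1 is less than half, because the minority class contributes an F1 of zero.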

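🔥 Today's Retrospective

In the morning, during the daily scrum, we discussed which experiments to run and how to improve performance. I think I spent the most time on how to solve the class imbalance problem. I ran experiments while watching one of the model lectures, trying different optimizers and adjusting the learning rate. I made a submission, but the metric did not change much, perhaps because the number of epochs was still small. In the peer session we again thought about how to improve performance and organized several experiment ideas. Since today is Friday, I wrote the team retrospective. The week went by quickly, but I reflected on it by listing what went well and what fell short. Next week is the last week of the competition, and I think I can do better than I did while struggling this week, so I'm looking forward to it!!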
